Reasons to worry about AGI

I'm getting very worried about AGI, for a few reasons:

- We're ahead of even the most optimistic timelines — what's happening with transformers is taking everyone by surprise.

- Humans are famously bad at dealing with exponentials (we saw how this played out with covid), and there is no fire alarm for AGI.

- The folks closest to the problem are the least able to worry.

I was chatting with a researcher from OpenAI the other day who told me he intellectually understood AGI risks, but couldn't feel the fear emotionally, because he was so close to the problem and saw how hard it was to get these models to do anything.

That seemed to me like an Inuit who couldn't worry about global warming because "it's still so darn cold around here."

Another reason why these folks can't worry is because, in this researcher's own words, they're "caught in a testosterone-fueled race to the biggest models against other teams." - One step removed from these AI researchers, the tech community at large seems stuck in mood affiliation — everyone wants to be a tech optimist, and reasons backwards from that mood instead of forward from first principles.

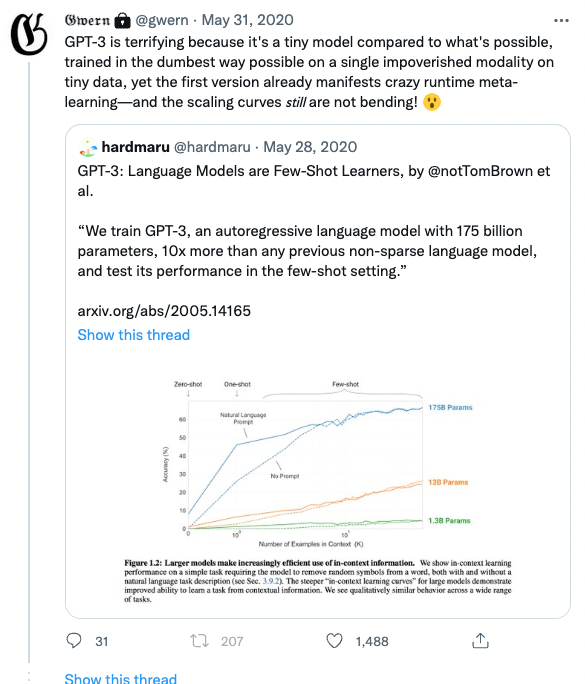

This is exemplified in ubiquitous talks that "AI is just a tool." But we've never had this kind of tool before, or anything close; and chainsaws or bombs are tools too — tools blow up in our faces all the time. - For the first time, we have a model that shows generality (we just threw a bunch of internet text at it, and now it can code, summarize, translate, write poetry, and even do math), and the scaling curves simply don't bend:

So, to summarize: we can't even reason about the problem, because humans are bad at reasoning about exponentials; even if we could, we wouldn't worry about it (and the folks closest to the problem are the least likely to worry); and even if we did worry, we probably wouldn't be able to do anything about it.

The only thing that gives me solace is that, historically, new life forms don't extinguish previous ones. Lizards are still around, and so are cyanobacteria. But I remain worried, and think AGI risk is just as underfunded as pandemic preparation was before covid.

Flo Crivello Newsletter