Heroes on the Shoulders of Giants

Epistemic status: I’m outside of my circle of competence when it comes to AI. That said, most of this post is just logical reasoning, independent of AI-specific knowledge.

François Chollet (whom you should definitely follow on Twitter) writes about “The Impossibility of Intelligence Explosion.” Here’s his TL;DR, although I’d encourage you to go read the whole thing:

- Intelligence is situational — there is no such thing as general intelligence. Your brain is one piece in a broader system which includes your body, your environment, other humans, and culture as a whole.

- […] Currently, our environment, not our brain, is acting as the bottleneck to our intelligence.

- Human intelligence is largely externalized, contained not in our brain but in our civilization. We are our tools — our brains are modules in a cognitive system much larger than ourselves. A system that is already self-improving, and has been for a long time.

- Recursively self-improving systems, because of contingent bottlenecks, diminishing returns, and counter-reactions […], cannot achieve exponential progress in practice. Empirically, they tend to display linear or sigmoidal improvement. […]

- Recursive intelligence expansion is already happening — at the level of our civilization. It will keep happening in the age of AI, and it progresses at a roughly linear pace.

I think the piece makes a couple of brilliant points — I love the idea of “civilization as an exocortex” — but I disagree with some of those points, or think they still allow for the possibility of a future intelligence explosion. Here’s my take on this.

1. We’ve already gone exponential

François Chollet writes:

[Y]ou may ask, isn’t civilization itself the runaway self-improving brain? Is our civilizational intelligence exploding? No. Crucially, the civilization-level intelligence-improving loop has only resulted in measurably linear progress in our problem-solving abilities over time. Not an explosion.

I disagree, and think that yes, we’ve already been going exponential, by a lot of metrics.

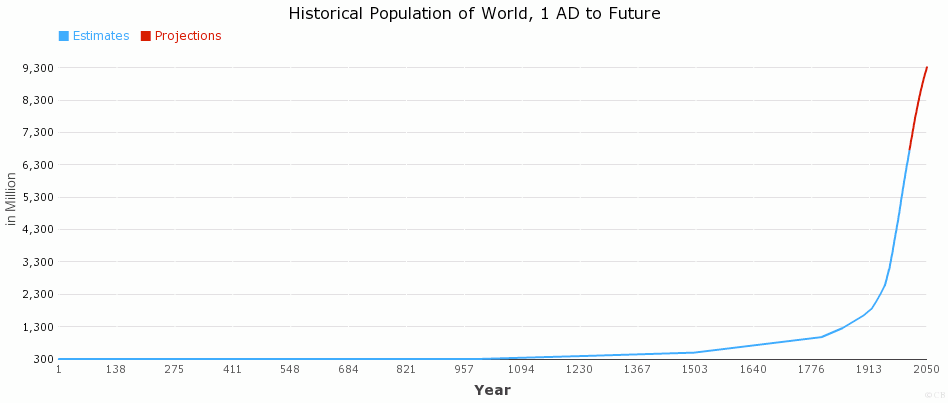

This is the historical population of the world (although some say that this should soon start looking like an S-curve, the world having already reached peak child):

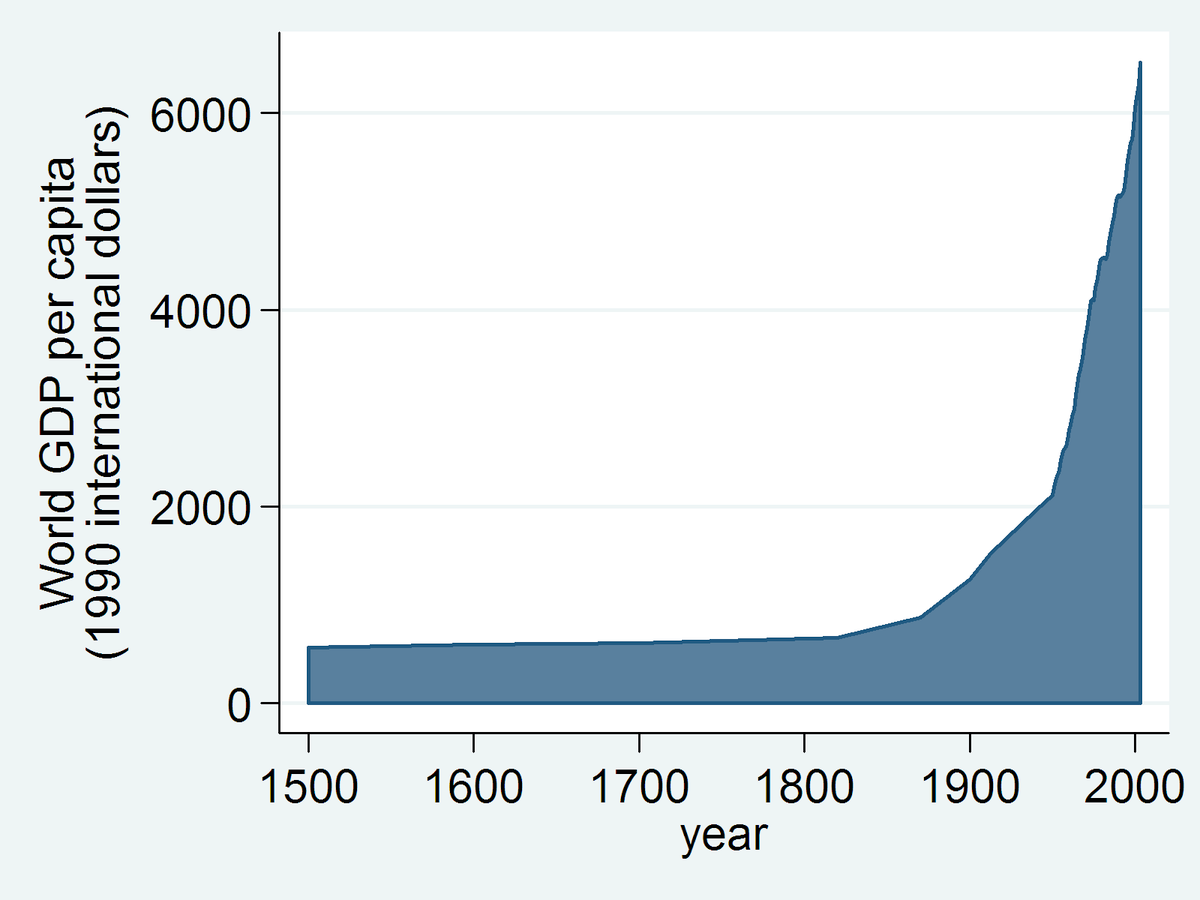

Now, here’s the World GDP (or GWP) per capita across history, in 1990 dollars (from Wikipedia’s “World Economy” article):

Remember, this is per capita, so the curve represents growth in productivity itself, rather than in population. And this is over a very short time span! 500 years isn’t much compared to the ~10,000 years that human civilization’s been around for.

And this is limited to humans, who, in the grand scheme of the Universe, are an extremely recent phenomenon. You may already have heard of Carl Sagan’s “Cosmic Calendar,” which compresses the Universe’s 13.8-billion-year chronology into a single year. On this timeline, life doesn’t appear until September 21st, multicellular organisms until December 5th, and anatomically modern humans until December 31st at 11:52PM. Agriculture, which many historians consider the beginning of human civilization, doesn’t start until 11:59PM and 32 seconds.

In his article on “quantitative macrohistory,” Luke Muehlhauser writes:

[M]y one-sentence summary of recorded human history is this: Everything was awful for a very long time, and then the industrial revolution happened.

This industrial revolution, on the “cosmic calendar”, doesn’t start until 570 milliseconds before midnight.
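For the curious, here is a rough sketch of the arithmetic behind those Cosmic Calendar figures. It assumes a 365-day calendar year and the 13.8 billion years quoted above; the "roughly 250 years ago" date for the industrial revolution is my own assumption, and the function names are just illustrative.

```python
# Sketch: map real elapsed time onto a one-year "Cosmic Calendar".
UNIVERSE_AGE_YEARS = 13.8e9                      # figure quoted in the text
SECONDS_PER_CALENDAR_YEAR = 365 * 24 * 60 * 60   # assumes a 365-day year


def seconds_before_midnight(years_ago: float) -> float:
    """Calendar seconds before Dec 31st, midnight, for an event `years_ago`."""
    return years_ago / UNIVERSE_AGE_YEARS * SECONDS_PER_CALENDAR_YEAR


def years_ago_from_seconds(seconds: float) -> float:
    """Inverse mapping: real years represented by `seconds` on the calendar."""
    return seconds / SECONDS_PER_CALENDAR_YEAR * UNIVERSE_AGE_YEARS


# Industrial revolution, assumed to have started roughly 250 years ago.
industrial_revolution_ms = seconds_before_midnight(250) * 1000

# Agriculture at 11:59:32PM, i.e. 28 calendar seconds before midnight.
agriculture_years_ago = years_ago_from_seconds(28)

print(f"Industrial revolution: {industrial_revolution_ms:.0f} ms before midnight")
print(f"Agriculture: about {agriculture_years_ago:,.0f} years ago")
```

Under these assumptions, one calendar second stands for a bit under 440 real years, which is why the whole industrial era fits in about half a second.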

So, sure, if you look at it on the timescale of a single human, things are moving kind of slowly. But, remember: it’s a miracle we’re seeing anything move at all! For most of human history, one could have time-traveled 100 years into the future and been able to function normally, barely noticing any difference (except for the way those punks are now tying their hair with 2 bones instead of 3).

To wrap this point up: on the grand timescale of the Universe, nothing happened for a very long time, and then all of a sudden, life developed. From this point already, staying on the scale of the Universe makes it hard to tell events apart, so we need to zoom into this thin sliver. There, we can see, again, not much happen, and then all of a sudden, mammals develop, including apes. Zooming further, not much happening, and then human civilization! Zooming in, not much, and then industrial revolution!

You get the idea: not only do I think the singularity can happen, I think we’re already living in it.

If there ever is a computational singularity, where nanoseconds will be to those machines what millennia are to us, they’ll summarize not only human history, but even our modern times, as “nothing happened, and then there was the seed AI”. Our “can you imagine Earth was around for 600 million years before any life developed?” will be their “can you imagine they used to sleep for 8 hours a day?”, 8 hours being as unfathomable a duration to them, as 600 million years are to us.

(I realize I’m grossly anthropomorphizing what would actually be completely alien entities, but it’s fun, and you get my point.)

2. Individuals are the ones pushing things forward

Chollet writes: (emphasis mine)

An individual human is pretty much useless on its own — again, humans are just bipedal apes. It’s a collective accumulation of knowledge and external systems over thousands of years — what we call “civilization” — that has elevated us above our animal nature. When a scientist makes a breakthrough, the thought processes they are running in their brain are just a small part of the equation — the researcher offloads large extents of the problem-solving process to computers, to other researchers, to paper notes, to mathematical notation, etc. And they are only able to succeed because they are standing on the shoulder of giants — their own work is but one last subroutine in a problem-solving process that spans decades and thousands of individuals. Their own individual cognitive work may not be much more significant to the whole process than the work of a single transistor on a chip.

Those “standing on the shoulders of giants” points never sit quite right with me, especially when they’re used to de-emphasize the importance of individuals. They’re true, to some extent: we all leverage the progress civilization has made before us, so that we can move it forward ourselves.

But to the extent we’re all standing on those giants’ shoulders, why do so few of us achieve anything noteworthy? Is it only about luck and circumstances, aka “being in the right place, at the right time”?

I think circumstances matter, but they’re not the main factor. When scientists made some of the greatest discoveries, many other people were arguably in equally good circumstances, and often in much better ones than the researcher who ended up making the breakthrough. Einstein is the canonical example: how come this clerk, stuck in a patent office in Switzerland, was the one who discovered special relativity, when so many others had tenure, more time to think about that stuff than he did, access to more content, more brains to pick and have fascinating conversations with, and sometimes entire teams and laboratories at their disposal?

Newton, too, had his famous “annus mirabilis”, when he worked out his theory of gravity, as well as differential and integral calculus, while stuck at his family’s home in the countryside to avoid a plague epidemic.

If anything, it sometimes seems like the only way to have any impact is to know when to stop shoulders-climbing, and start working on your own thing, like the researchers in those two examples did. After all, those shoulders know no top: you could dedicate your entire life to a single field, without having exhausted the discoveries that have been made in it. In the words of Richard Hamming:

There was a fellow at Bell Labs, a very, very, smart guy. He was always in the library; he read everything. If you wanted references, you went to him and he gave you all kinds of references. But in the middle of forming these theories, I formed a proposition: there would be no effect named after him in the long run. He is now retired from Bell Labs and is an Adjunct Professor. He was very valuable; I’m not questioning that. He wrote some very good Physical Review articles; but there’s no effect named after him because he read too much.

I agree the work civilization has done before you matters, but the importance of individuals still seems under-appreciated by most people. It takes only one person, sailing further than others did, to push the frontier forward for everybody else. In this sense, civilization actually moves as fast as its fastest member. We only need one Columbus to unlock access to a whole new continent, one Einstein to get the insights required to develop lasers and nuclear power, one Darwin to gain a new understanding of life.

(As an aside, this “bias towards action”, required to have any impact, appears to me as one of the greatest strengths of Americans. It is so easy to lose oneself in a pointless hoarding of knowledge, and be frozen into inaction by “what ifs” and “things we could have missed”. Americans, with their “shoot first, ask questions after” ethos, seem to appreciate that one should start doing something before they completely understand it.)

3. Brains are the bottleneck

François Chollet continues:

[T]he current bottleneck to problem-solving, to expressed intelligence, is not latent cognitive ability itself. The bottleneck is our circumstances. Our environment, which determines how our intelligence manifests itself, puts a hard limit on what we can do with our brains — on how intelligent we can grow up to be, on how effectively we can leverage the intelligence that we develop, on what problems we can solve. All evidence points to the fact that our current environment, much like past environments over the previous 200,000 years of human history and prehistory, does not allow high-intelligence individuals to fully develop and utilize their cognitive potential.

I agree with this, except when it comes to the frontier. If you broadly define intelligence as the ability of an entity to control its environment then, by and large, one’s intelligence sits outside one’s brain: 99% of one’s ability to control one’s environment depends on the ticket pulled in the lottery of “in what century will you be born”, with less than 1% determined by IQ.

But that civilization was, in the first place, built and shaped by individuals, just as much as they were in turn shaped by it. When it comes to pushing the frontier, and redefining what the next centuries will mean for the generations living them, the bottleneck lies in these individuals. Here, I echo Peter Thiel’s rejection of technological determinism, according to which “progress will happen, no matter what.” For better or for worse, I think that, regardless of the current cultural momentum, the fate of the world still lies in the hands of individuals, and the actions and decisions they’ll make.

From a more concrete and “micro” standpoint, ask entrepreneurs what the current bottleneck holding back their companies’ growth is. Some will say it’s market adoption, or capital, proving Chollet right in his emphasis on contextual factors. But in AI, almost all will say it’s the brains: there just isn’t enough talent to go around, and articles abound about the piles of money companies will throw at the good ones when they find some, with seven-figure packages now commonplace.

Like Charlie Munger says:

I like artificial intelligence, because we’re so short of the real thing.

4. AI scales in a way humans can’t

Still from Chollet’s post:

An overwhelming amount of evidence points to this simple fact: a single human brain, on its own, is not capable of designing a greater intelligence than itself. This is a purely empirical statement: out of billions of human brains that have come and gone, none has done so. Clearly, the intelligence of a single human, over a single lifetime, cannot design intelligence, or else, over billions of trials, it would have already occurred.

But of course, nothing had ever happened until it happened for the first time. You could equally have said “it’s an empirical observation that no human can run 100 meters in less than 10 seconds, since nobody’s ever done it before”, until Jim Hines did it in 1968.

Chollet continues:

Will the superhuman AIs of the future, developed collectively over centuries, have the capability to develop AI greater than themselves? No, no more than any of us can.

But once we’ve got that seed AI, nobody’s talking about making only one copy of it. The thing with artificial intelligence is that, unlike its natural counterpart, it can actually scale, horizontally as well as vertically. When AlphaGo won its matches against world-champion Lee Sedol, many were quick to point out that:

The distributed AlphaGo system uses about 1 megawatt, compared to only 20 watts used by the human brain.

Which, according to those observers, makes the comparison unfair. However, like Benedict Evans says, “unfair comparisons are the most important kind.” The game doesn’t care how energy-efficient you are: you either win, or you lose. If anything, this seems to earn AlphaGo more points: in AI, once we find something that works, we can just throw a bunch of chips and megawatts at it, and watch it become even better. It’s often pointed out that the technologies behind today’s AI renaissance, like gradient descent, convolutional nets or deep learning, had actually been around for a long time, and just started becoming effective once we had enough computing power to feed them.

On the other hand, how do you think 50,000 humans, consuming a total of 1 megawatt, would have performed against a single AlphaGo? The closest thing I can think of is the Twitch Plays Pokemon experiment, where 1.2 million players took 394 hours to complete a game that a single kid can complete in about 25 hours.
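As a quick sanity check on those numbers, here is a back-of-the-envelope sketch. It only uses the figures quoted above (1 megawatt, 20 watts, 394 hours, 25 hours); the variable names are mine, and none of this says anything about how either system actually works.

```python
# Back-of-the-envelope check of the figures quoted in the text.
ALPHAGO_WATTS = 1_000_000   # "about 1 megawatt" for distributed AlphaGo
BRAIN_WATTS = 20            # "20 watts used by the human brain"

# How many human brains run on AlphaGo's power budget?
brains_per_alphago = ALPHAGO_WATTS // BRAIN_WATTS

CROWD_HOURS = 394           # Twitch Plays Pokemon, as quoted
SOLO_HOURS = 25             # a single player, as quoted

# How much slower was the crowd than one player?
crowd_slowdown = CROWD_HOURS / SOLO_HOURS

print(f"{brains_per_alphago:,} brains per AlphaGo power budget")
print(f"The crowd was about {crowd_slowdown:.1f}x slower than one player")
```

So on these figures, adding over a million human players made things roughly sixteen times slower, while adding megawatts to AlphaGo made it stronger.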

(For the record: I don’t think all we need for the singularity to happen is more computing power. We probably need a lot more theoretical breakthroughs to get there. All I’m saying is: once we’ve made those breakthroughs, and built this “seed AI”, we’ll be able to flip a switch, and get a million more copies of it, something you can’t do when you find a really smart human.)

To summarize:

- Looking at history over a large enough time scale, it’s clear the complexity of the Universe is already exploding exponentially—from subatomic particles, to atoms, to molecules, to life, to organisms, to civilization. An intelligence explosion would sit in the continuity of this phenomenon.

- Individuals are the ones pushing civilization forward, although they’re also leveraging the progress it’s made before them. Civilization tends to move as fast as its fastest member, since it only takes one of these individuals to push the frontier forward for everybody else.

- Hence, the quantity, and especially quality, of available brains are the bottlenecks standing in the way of progress…

- … and the first AI to surpass human intelligence would lift those bottlenecks, unlocking an intelligence explosion.

Thanks to Dan Wang and Kevin Simler for reviewing the draft and providing suggestions.

Flo Crivello Newsletter